Dominique Dumont: Easier Lcdproc package upgrade with automatic configuration merge

Hello

This blog explains how the next lcdproc package will provide easier upgrades with automatic configuration merge.

Here s the current situation: lcdproc is shipped with several configuration files, including

*

* user is asked once by

* no further question are asked (no ucf style questions). For instance, here s an upgrade from

* check

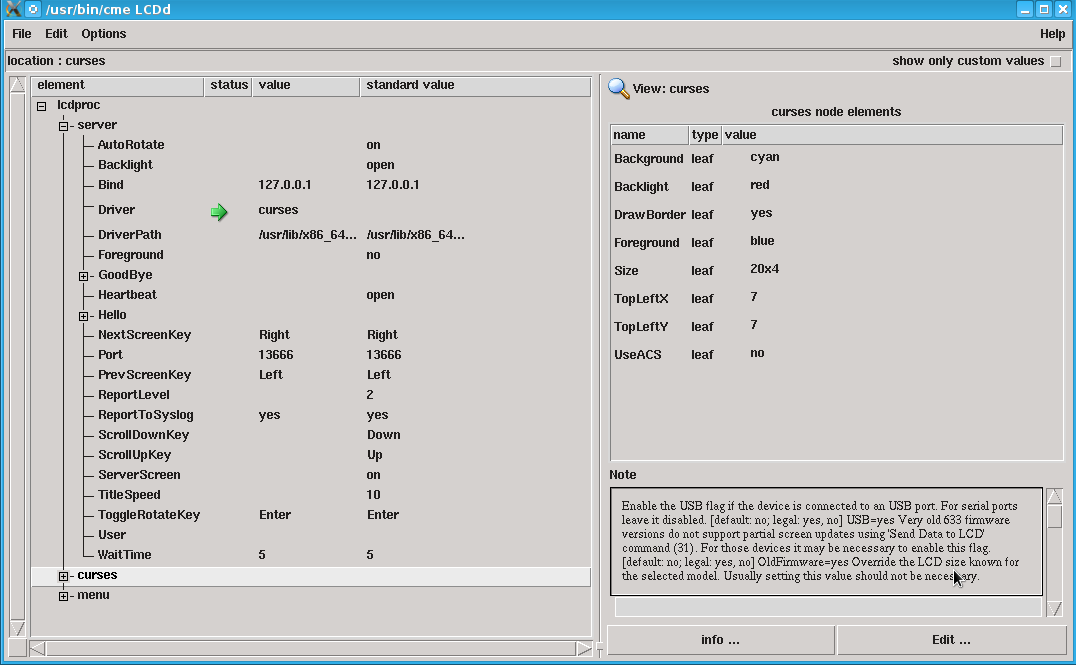

* edit the configuration with a GUI (see Managing Lcdproc configuration with cme for more details) Here s a screenshot of the GUI: More information

* libconfig-model-lcdproc-perl package page. This package provides a configuration model for

More information

* libconfig-model-lcdproc-perl package page. This package provides a configuration model for

* This blog explains how this model is generated from upstream

* How to adapt a package to perform configuration upgrade with Config::Model Next steps Automatic configuration merge can be applied to other packages. But my free time is already taken by the maintenance of Config::Model and the existing models, there s no room for me to take over another package. On the other hand, I will definitely help people who want to provide automatic configuration merge on their packages. Feel free to contact me on:

* config-model-user mailing list

* debian-perl mailing list (where Config::Model is often used to maintain debian package file with

* #debian-perl IRC channel All the best

Tagged: config-model, configuration, debian, Perl, upgrade

/etc/LCDd.conf. This file is modified upstream at every lcdproc release to bring configuration for new lcdproc drivers. On the other hand, this file is always customized to suit the specific hardware of the user s system. So upgrading a package will always lead to a conflict during upgrade. User will always be required to choose whether to use current version or upstream version.

The next version of libconfig-model-lcdproc-perl will offer the user an automatic merge of the configuration: upstream changes are taken into account while user changes are preserved.

The configuration upgrade shown here is based on Config::Model and can be applied to other packages.

Current lcdproc situation

To function properly, the lcdproc configuration must always be adapted to suit the user's hardware. By the time of the next upgrade, the upstream configuration has often been updated, so the user will often be shown this question:

Configuration file '/etc/LCDd.conf'

==> Modified (by you or by a script) since installation.

==> Package distributor has shipped an updated version.

What would you like to do about it ? Your options are:

Y or I : install the package maintainer's version

N or O : keep your currently-installed version

D : show the differences between the versions

Z : start a shell to examine the situation

The default action is to keep your current version.

*** LCDd.conf (Y/I/N/O/D/Z) [default=N] ?

This question is asked in the middle of an upgrade and can be puzzling for an average user.

Next package with automatic merge

Starting from lcdproc 0.5.6, the configuration merge is handled automatically by the packaging script with the help of Config::Model::Lcdproc.

When lcdproc is upgraded to 0.5.6, the following changes are visible:

* lcdproc depends on libconfig-model-lcdproc-perl

* user is asked once by debconf whether to use automatic configuration upgrades or not.

* no further questions are asked (no ucf-style questions).

For instance, here's an upgrade from lcdproc_0.5.5 to lcdproc_0.5.6:

    $ sudo dpkg -i lcdproc_0.5.6-1_amd64.deb
    (Reading database ... 322757 files and directories currently installed.)
    Preparing to unpack lcdproc_0.5.6-1_amd64.deb ...
    Stopping LCDd: LCDd.
    Unpacking lcdproc (0.5.6-1) over (0.5.5-3) ...
    Setting up lcdproc (0.5.6-1) ...
    Changes applied to lcdproc configuration:
    - server ReportToSyslog: '' -> '1' # use standard value
    update-rc.d: warning: start and stop actions are no longer supported; falling back to defaults
    Starting LCDd: LCDd.
    Processing triggers for man-db (2.6.6-1) ...

Note: the automatic upgrade currently applies only to LCDd.conf. The other configuration files of lcdproc are handled the usual way.
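To make the idea of "upstream changes are taken into account while user changes are preserved" more concrete, here is a toy sketch in Python. It is not how Config::Model works internally; the function name `merge_config`, the flat dictionaries, and the sample values are assumptions made purely for illustration. It only shows the general rule: keep what the user customized, adopt new upstream values elsewhere, and report what changed.

```python
def merge_config(user, old_defaults, new_defaults):
    """Toy merge: keep user customizations, adopt new upstream defaults.

    All three arguments are flat dicts of option name -> string value.
    Returns (merged, changes), where changes is a list of report lines.
    This is an illustrative sketch, not the Config::Model algorithm.
    """
    merged = {}
    changes = []
    for key, new_value in new_defaults.items():
        if key in user and user[key] != old_defaults.get(key):
            # The user changed this option away from the old default: keep it.
            merged[key] = user[key]
        else:
            # Option untouched (or absent): follow the new upstream default.
            merged[key] = new_value
            old_value = user.get(key, "")
            if old_value != new_value:
                changes.append(f"{key}: '{old_value}' -> '{new_value}'")
    # Options known only to the user's file are kept as they are.
    for key, value in user.items():
        merged.setdefault(key, value)
    return merged, changes


# Hypothetical sample data (values chosen for illustration only).
old_defaults = {"Driver": "curses", "Port": "13666"}
new_defaults = {"Driver": "curses", "Port": "13666", "ReportToSyslog": "1"}
user = {"Driver": "hd44780", "Port": "13666"}

merged, changes = merge_config(user, old_defaults, new_defaults)
for line in changes:
    print("-", line)
```

In this sketch the customized driver survives the upgrade, while the option that is new upstream is added and reported, much like the "Changes applied to lcdproc configuration" lines in the transcript above.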

Other benefits

User will also be able to:* check

lcdproc configuration with sudo cme check lcdproc* edit the configuration with a GUI (see Managing Lcdproc configuration with cme for more details) Here s a screenshot of the GUI:

More information

* libconfig-model-lcdproc-perl package page. This package provides a configuration model for lcdproc.

* This blog explains how this model is generated from upstream LCDd.conf.

* How to adapt a package to perform configuration upgrade with Config::Model

Next steps

Automatic configuration merge can be applied to other packages. But my free time is already taken up by the maintenance of Config::Model and the existing models, so there's no room for me to take over another package. On the other hand, I will definitely help people who want to provide automatic configuration merge in their packages. Feel free to contact me on:

* config-model-user mailing list

* debian-perl mailing list (where Config::Model is often used to maintain Debian package files with cme)

* #debian-perl IRC channel

All the best

Tagged: config-model, configuration, debian, Perl, upgrade

A couple of weeks ago,

I was pretty knackered today. I should have done some Debian stuff, but I just didn't have it in me, and I had a backlog of household stuff to get done.

Zoe woke up at 4am, and I didn't have the energy to try and get her to go back to sleep in her bed, so I just let her sleep in my bed. We both slept in until 7am, which was nice.

As a result, we didn't have as much time to have a slow start, and Zoe was a bit grumpy and uncooperative as a result. I think there was at least one meltdown before breakfast.

It conveniently rained around the time we were ready to leave, so we drove to Kindergarten. Drop off was super smooth. She pretty much waved me off as soon as we got there.

I picked up a couple of packages from the post office on the way home, and then started hanging out the washing before I had to go back to see the podiatrist to get my orthotics fitted in my new running shoes. I finished hanging out the washing and putting away stuff from the Coochie trip and Melbourne trip and had some lunch.

After lunch I started work on making a little step for Zoe so she can turn the light off in her bedroom. I had enough material left over from the clothes lines I made for her to make a really dodgy little "stool".

It rained again around pick up time, so I drove to Kindergarten again, and picked up Zoe. She'd just woken up from a nap before I got there and was in a good mood. Megan's Dad was picking up Megan on foot, and they were going to have a coffee at the local coffee shop, so we joined them.

After that, we went to the post office to check my post office box. I had a cheque that needed to be banked, so we went to the bank and the supermarket, and by then it was pretty much time for Sarah to pick up Zoe from me.

I did a bit more carpentry before I lost the light, and went to yoga.

Back in 2006, the compiler in Ubuntu was

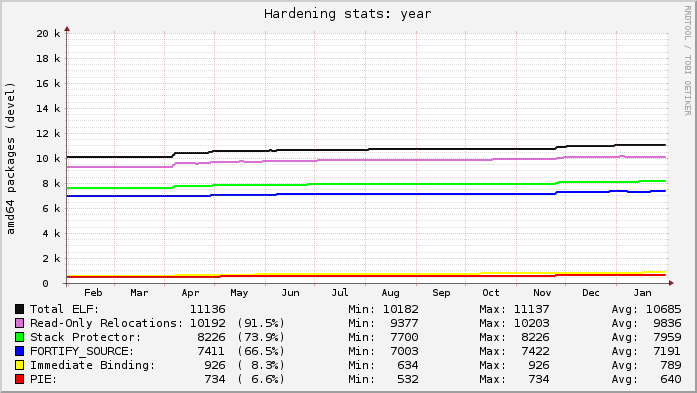

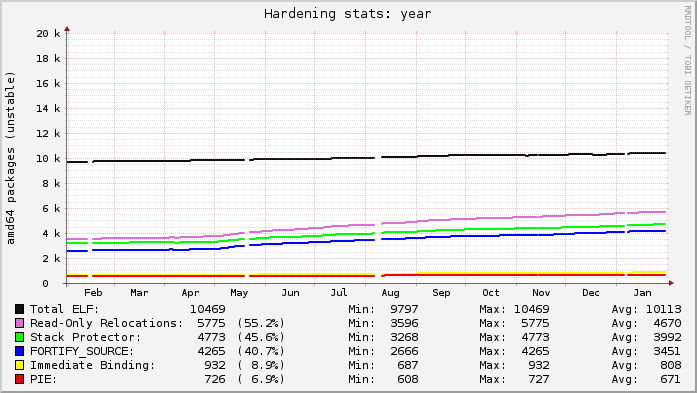

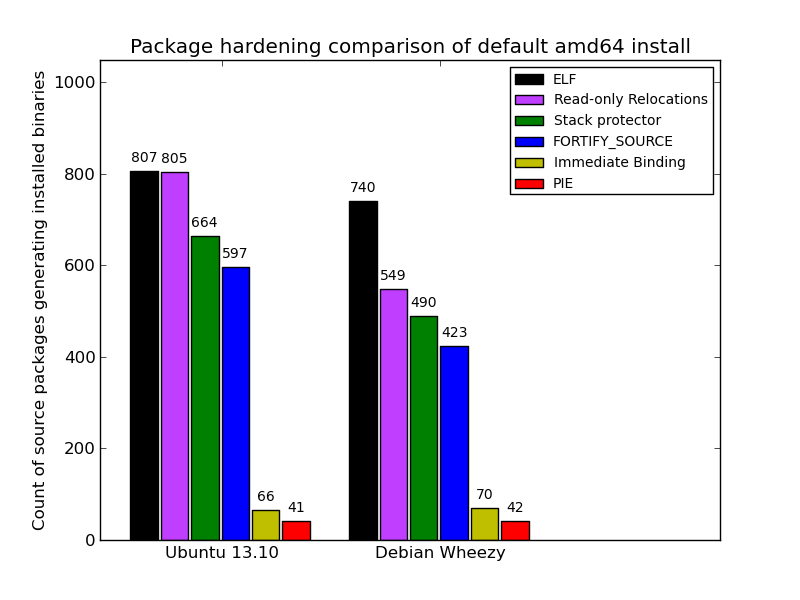

Here's today's snapshot of Debian's unstable archive for the past year (at the start of May you can see the archive unfreezing after the Wheezy release; the gaps were my analysis tool failing):

Ubuntu's lines are relatively flat because everything that can be built with hardening already is. Debian's graph is on a slow upward trend as more packages get migrated to dh to gain knowledge of the global flags.

Each line in the graphs represents the count of source packages that contain binary packages that have at least 1 hit for a given category. "ELF" is just that: a source package that ultimately produces at least 1 binary package with at least 1 ELF binary in it (i.e. produces a compiled output). The Read-only Relocations ("relro") hardening feature is almost always done for an ELF, excepting uncommon situations. As a result, the counts of ELF and relro are close on Ubuntu. In fact, examining relro is a good indication of whether or not a source package got built with hardening of any kind. So, in Ubuntu, 91.5% of the archive is built with hardening, with Debian at 55.2%.

The stack protector and fortify source features depend on characteristics of the source itself, and may not always be present in a package's binaries even when hardening is enabled for the build (e.g. no functions got selected for stack protection, or no fortified glibc functions were used). Really these lines mostly indicate the count of packages that have a sufficiently high level of complexity that would trigger such protections.

The PIE and immediate binding ("bind_now") features are specifically enabled by a package maintainer. PIE can have a noticeable performance impact on CPU-register-starved architectures like i386 (ia32), so it is neither patched on in Ubuntu, nor part of the default flags in Debian. (And bind_now doesn't make much sense without PIE, so they usually go together.) It's worth noting, however, that it probably should be the default on amd64 (x86_64), which has plenty of available registers.
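The counting rule described above (a source package counts for a category when at least one of its binary packages has at least one hit) can be sketched in a few lines of Python. This is not the analysis tool behind the graphs: the function name, the data layout, and the package names are hypothetical. The sketch also assumes that the "built with hardening" share is the relro count divided by the ELF count, which the text suggests but does not state explicitly.

```python
from collections import Counter


def count_sources_by_feature(archive):
    """Count source packages with at least one hit per category.

    `archive` maps source package -> {binary package -> set of categories}.
    A source package is counted once per category, however many of its
    binary packages show that category.
    """
    counts = Counter()
    for binaries in archive.values():
        hits = set()
        for features in binaries.values():
            hits |= set(features)
        counts.update(hits)  # one increment per category for this source
    return counts


# Hypothetical toy archive, for illustration only.
archive = {
    "src-a": {"a-bin": {"elf", "relro", "stackprotector"}, "a-doc": set()},
    "src-b": {"b-bin": {"elf"}},
    "src-c": {"c-data": set()},
    "src-d": {
        "d-bin": {"elf", "relro", "pie", "bind_now"},
        "d-tools": {"elf", "relro"},
    },
}

counts = count_sources_by_feature(archive)
hardened_share = counts["relro"] / counts["elf"]
print(f"ELF sources: {counts['elf']}, relro sources: {counts['relro']}")
print(f"share built with hardening: {hardened_share:.1%}")
```

Note that src-d is counted only once for relro even though two of its binary packages have it, and src-c does not count at all because it produces no ELF output.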

Here is a comparison of default installed packages between the most recent stable releases of Ubuntu (

Update: I apologise for spamming all the nice people on Planet Debian with this unrelated post in a foreign language; it got mis-tagged and (although I tried classifying it correctly and removing it from the separate Debian-only RSS feed going to Planet Debian) the Planet software just keeps it around until admins get a spare moment to remove it manually. The basic idea of the post is that there is an e-government service by the Latvian government that provides any citizen a way to check what their pension would be, but it is kinda useless, because it calculates what your pension would be if you decided to retire right now. So in the post below I provide a few simple steps on how to estimate what the real pension could be (in today's currency values) if a person kept paying into the system at the same rate until the normal retirement age.

Latvija.lv ir

* Somehow, I felt that there should be a post, at least to abuse Planet Debian in the new year of 2013. So, what's going on?

1. Finished Full Marathon aka Standard Chartered Mumbai Marathon with pathetic timing of

when it's a prediction.

In the 4th January edition of the Guardian Weekly, the front page story, entitled "Meet the world's new boomers"
